A couple of months ago I wrote about the release of an updated flow for processing players on the server, which could be activated with `UsePlayerUpdateJobs 2`. Its key aspect is that it tries to leverage multithreading to speed up stages of the logic. The highlights are:

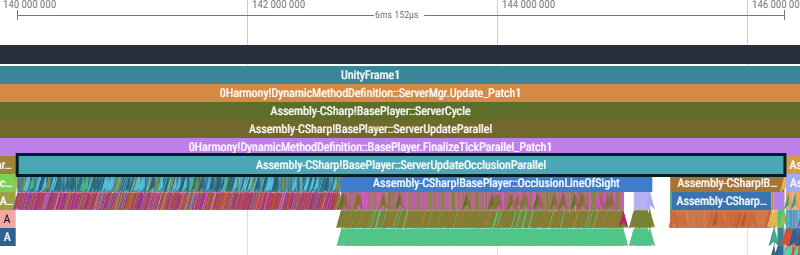

- We can run server occlusion in parallel

- We can send entity snapshots and entity destroy messages in parallel

- Only the main thread serializes entity snapshots; worker threads consume the cached snapshots
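The split in the last bullet can be sketched roughly as follows. This is a minimal Python illustration under assumed names (`Player`, `serialize_snapshot`, `send_updates` and `process_players` are all hypothetical), not the server's actual code. The main thread serializes each needed snapshot exactly once into a cache, and a thread pool then fans those cached bytes out to players. Python threads won't give real CPU parallelism because of the GIL, so the point here is the data flow, not the speedup.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field


@dataclass
class Player:
    # Hypothetical stand-in for a connected player.
    name: str
    visible_entities: list[int]            # entity ids this player should receive
    outbox: list[bytes] = field(default_factory=list)


def serialize_snapshot(entity_id: int) -> bytes:
    # Hypothetical serializer; a real server would write the full entity state.
    return f"entity:{entity_id}".encode()


def send_updates(player: Player, cache: dict[int, bytes]) -> int:
    # Worker side: only reads the shared cache, never serializes anything.
    for entity_id in player.visible_entities:
        player.outbox.append(cache[entity_id])
    return len(player.outbox)


def process_players(players: list[Player], workers: int = 4) -> int:
    # Main thread: serialize every needed snapshot exactly once.
    needed = {eid for p in players for eid in p.visible_entities}
    cache = {eid: serialize_snapshot(eid) for eid in needed}
    # Worker threads: hand the cached bytes to each player in parallel.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(lambda p: send_updates(p, cache), players))
```

Two players that can both see entity 2 end up holding the very same cached `bytes` object, so that entity was serialized once rather than once per viewer.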

I've run a bunch of tests on our servers and the results have been mixed: on fresh servers the new mode showed good results, but on older servers it showed no benefit at all. I investigated and found the following problems, which have been addressed in this release.

As last time, I'm hoping to validate the new mode and, once I've confirmed it holds up, roll it out to all official servers.

Server Occlusion - Too Much Work

In retrospect, I'd already mentioned in the old blog post how 350 players can cause 10k various checks to run; I just assumed we'd be able to sift through them quickly. That's not the case:

In the view above, we run a lot of small checks that are usually cheap, but there are so many pairs to check (~2.7k in the 330-player snapshot above) that the total becomes expensive. The way we gathered pairs was also inefficient: we could generate an (active player, sleeper) from-to visibility check, which meant we'd eventually have to validate whether we should send data to the sleeper at all. We also intermixed player state checks throughout the logic, so if we discovered halfway through pair filtering that something needed revalidating, we'd end up generating more pairs. All in all, it was still doing too much work.

I've applied the following changes:

- I've simplified the player/sleeper state checks further, since some state can be inferred, and added caching of their results

- When generating pairs, we now always end up with (player-or-sleeper, player) from-to pairs.

- Because of the above, I ripped out the additional downstream checks for whether pair.to is ever not a player or should be ignored
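A toy Python sketch of the pair-generation change, assuming a plain distance check (`in_range`) stands in for whatever broad phase the server really uses; `Entity`, `build_pairs_old` and `build_pairs_new` are hypothetical names. The old-style generator lets sleepers show up as `to`, while the new one evaluates player/sleeper state once per entity and only ever targets active players.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    # Hypothetical minimal record for a connected player or a sleeper.
    id: int
    x: float
    y: float
    sleeping: bool


def in_range(a: Entity, b: Entity, radius: float) -> bool:
    # Stand-in for the broad phase that decides a pair is worth an occlusion check.
    return math.dist((a.x, a.y), (b.x, b.y)) <= radius


def build_pairs_old(entities: list[Entity], radius: float) -> list[tuple[int, int]]:
    # Any entity can be the target, so sleepers appear as `to` and
    # have to be filtered out again downstream.
    return [(a.id, b.id) for a in entities for b in entities
            if a is not b and in_range(a, b, radius)]


def build_pairs_new(entities: list[Entity], radius: float) -> list[tuple[int, int]]:
    # Evaluate the player/sleeper state once per entity and reuse the result.
    receivers = [e for e in entities if not e.sleeping]
    # Every pair is (player-or-sleeper, player): only active players receive data,
    # so downstream code never needs to ask whether pair.to is a player.
    return [(src.id, dst.id) for dst in receivers for src in entities
            if src is not dst and in_range(src, dst, radius)]
```

With two players and two sleepers all in range of each other, the old generator produces 12 pairs and the new one 6. The actual reduction on a live server depends on the player/sleeper mix and on the broad phase.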

This should cut hash lookups by ~75% and reduce the pair count by ~50% (rough guess). I hope it'll bring server occlusion times down to ~2ms per frame for the 330p case.

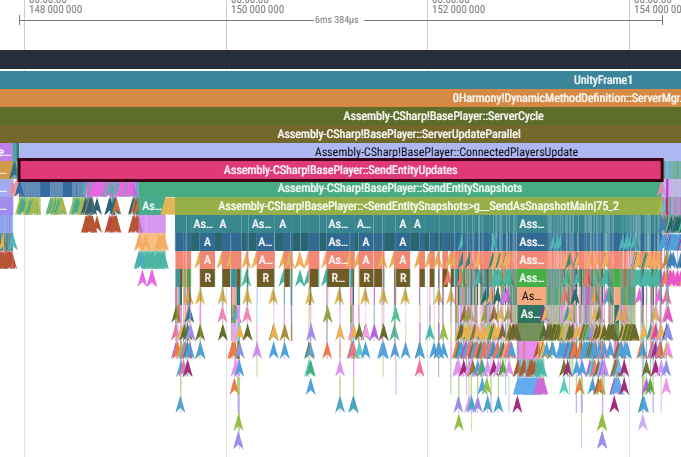

Player Processing - Lock Contention

Another obvious problem became apparent in the snapshots: our multithreaded player update processing was slow, as slow as serial processing.

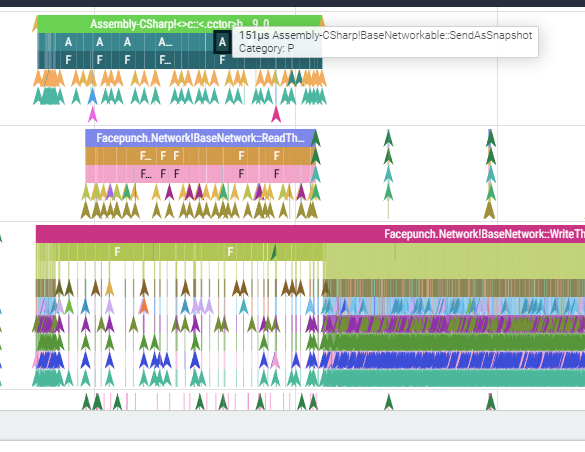

All threads, including main, got bottlenecked on one lock. In the profiler you can see the purple WriteThread below sitting idle in gaps right as the green worker thread above is pushing more work.

We rely on pooling to reduce allocations. To do that safely, our pool uses one lock per type, which keeps it fast in single-threaded cases but doesn't scale when many threads hit it at once. It was rather bad, to the point where the total time spent across threads pushing entity snapshots was 100ms.
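To make the contention concrete, here's a minimal Python sketch of a pool with one lock per pooled type. `TypedPool` and `SnapshotBuffer` are hypothetical and only loosely follow the description above; the game's real pool isn't shown in this post.

```python
import threading


class SnapshotBuffer:
    # Hypothetical pooled type standing in for an entity snapshot buffer.
    def __init__(self):
        self.payload = b""


class TypedPool:
    """Object pool with one lock per pooled type (a sketch, not the game's code)."""

    def __init__(self, types):
        # Locks are created up front so lookups never race on lock creation.
        self._free = {t: [] for t in types}
        self._locks = {t: threading.Lock() for t in types}

    def get(self, cls):
        # Every thread asking for the same type queues on this single lock:
        # cheap when uncontended, but it serializes many concurrent workers.
        with self._locks[cls]:
            if self._free[cls]:
                return self._free[cls].pop()
        return cls()

    def free(self, obj):
        cls = type(obj)
        with self._locks[cls]:
            self._free[cls].append(obj)
```

With one thread, each `get`/`free` takes an uncontended lock and stays cheap. With many workers all pushing snapshots, every call for `SnapshotBuffer` queues on the same `self._locks[SnapshotBuffer]`, so the workers end up taking turns instead of running in parallel.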

Instead of modifying our pool implementation (it's used across our entire codebase), we now pre-allocate all the necessary state on the main thread, so worker threads no longer contend on the pool locks. I'm hoping this will speed up the processing and bring it closer to 3ms for a comparable population (330p).
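The pre-allocation approach can be sketched like this, again in Python with hypothetical names (`WriteBuffer`, `MainThreadPool`, `push_snapshots`, `update_players`). The assumption is that the main thread knows up front how many buffers each player job needs, so it takes them all from the pool before starting the workers, and each worker only touches its own list.

```python
from concurrent.futures import ThreadPoolExecutor


class WriteBuffer:
    # Hypothetical pooled buffer for one outgoing entity snapshot.
    def __init__(self):
        self.payload = b""


class MainThreadPool:
    # Minimal stand-in for the shared pool. In this sketch it is only touched
    # from the main thread, so a per-type lock on it would never be contended.
    def __init__(self):
        self._free = []
        self.created = 0

    def get(self):
        if self._free:
            return self._free.pop()
        self.created += 1
        return WriteBuffer()

    def free(self, obj):
        self._free.append(obj)


def push_snapshots(job):
    # Worker side: fill only the buffers handed to this job, no pool access.
    # The payload is a stand-in for copying a cached snapshot into the buffer.
    entity_ids, buffers = job
    for eid, buf in zip(entity_ids, buffers):
        buf.payload = f"entity:{eid}".encode()
    return buffers


def update_players(visible_per_player, pool, workers=4):
    # Main thread: pre-allocate exactly what each player job will need.
    jobs = [(ids, [pool.get() for _ in ids]) for ids in visible_per_player]
    with ThreadPoolExecutor(max_workers=workers) as executor:
        results = list(executor.map(push_snapshots, jobs))
    # Main thread again: return everything to the pool once workers are done
    # (a real server would send the buffers before recycling them).
    for buffers in results:
        for buf in buffers:
            pool.free(buf)
    return results
```

Calling `update_players` a second time reuses the buffers freed by the first call instead of allocating new ones, and the pool is never touched while the workers are running.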