
Network Message Leak

As part of the public testing of the Server Profiler's memory tracking, a high-pop community server shared a performance snapshot captured during a "memory leak" scenario. The graph at the bottom revealed that our message-decryption thread had suddenly started allocating heavily, at a rate of about 10 MB/second.

This was unexpected, as we hadn't seen such behaviour on our official servers. After investigating, I found a gotcha in the `ConcurrentQueue` implementation that ships with Mono. The issue has now been fixed.

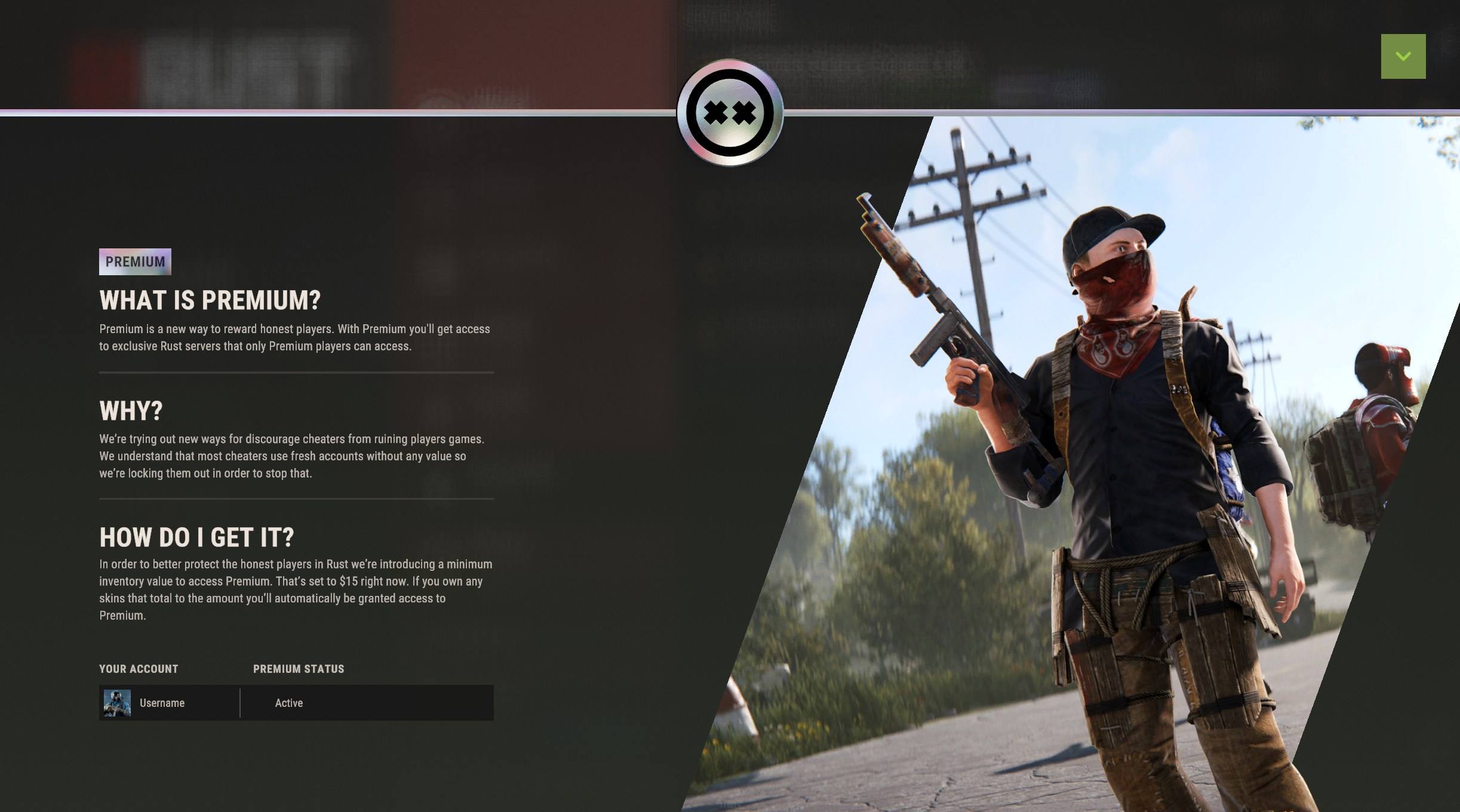

Analytics Optimizations

Server Profiler's memory tracking also revealed that our analytics processing logic can be memory-hungry. This was a surprise, since we were using routines designated as non-allocating, but it turns out that formatting floats is a silent exception to that set. The above screenshot was taken on an official 110-player server, and it shows that analytics contributes 15,605 allocations, totalling about 1 MB of garbage.

I've removed the unexpected allocations from float formatting and from using async tasks to perform the upload. I'm hoping this will reduce the pressure on garbage collection and make it trigger less frequently on high-pop servers.
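To illustrate the general idea behind allocation-free float formatting, here's a minimal sketch that writes a float as fixed-point text into a caller-owned buffer that is reused across values, instead of producing a new string per value. It's written in Python for readability, so Python itself still creates small temporary objects; the point is the shape of the technique, not our actual analytics code. The helper name `write_fixed` and the fixed-decimals format are assumptions for illustration.

```python
def write_fixed(buf: bytearray, pos: int, value: float, decimals: int = 2) -> int:
    """Hypothetical helper: write `value` as fixed-point text into `buf` at `pos`.

    Returns the position just past the last written byte. Assumes `buf` is
    large enough and `value` is finite; no per-value string is produced.
    """
    scale = 10 ** decimals
    scaled = round(abs(value) * scale)  # note: Python's round() ties to even
    if value < 0 and scaled != 0:
        buf[pos] = ord("-")
        pos += 1
    int_part, frac_part = divmod(scaled, scale)

    # Count integer digits so they can be written right-to-left in place.
    digits = 1
    tmp = int_part
    while tmp >= 10:
        tmp //= 10
        digits += 1
    for i in range(pos + digits - 1, pos - 1, -1):
        buf[i] = ord("0") + int_part % 10
        int_part //= 10
    pos += digits

    if decimals > 0:
        buf[pos] = ord(".")
        pos += 1
        # Fractional digits, zero-padded to exactly `decimals` places.
        for i in range(pos + decimals - 1, pos - 1, -1):
            buf[i] = ord("0") + frac_part % 10
            frac_part //= 10
        pos += decimals
    return pos


buf = bytearray(64)  # allocated once and reused for every analytics record
pos = write_fixed(buf, 0, 1.5)
buf[pos] = ord(",")
pos += 1
pos = write_fixed(buf, pos, -2.25)
line = buf[:pos].decode()
print(line)  # 1.50,-2.25
```

The buffer is the only sizeable allocation, and it is reused; in a language like C# the same pattern lets per-value formatting avoid creating garbage entirely.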

Text Table Optimizations - Testing on Aux2

Another unexpected source of slowness and allocations is Text Table, a utility class that gathers data and outputs it as a human-readable formatted table (or as JSON). This utility is used both internally by us and externally by modders.

I recently came across this case during internal server profiling, running `server.playerlistpos` on a 200-player server:

Not only does it allocate aggressively (2.7k allocations), it is also slow: it took 5 milliseconds to process 200 players.

I've rewritten the implementation so that Text Table can be used more efficiently: it now offers a number of opt-in mechanisms and hints you can provide to speed up its internal operations. I've also eliminated 99% of the allocations it created during the process. It still generates binary-identical output, meaning it shouldn't* break any existing processes or mods.

I've updated the `playerlistpos` routine above to use all of the options, and now it looks like this:

It now handles 300 players in 0.8 ms with only 18 allocations: a win on all fronts.
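Since the before and after measurements use different player counts, a per-player comparison makes the improvement easier to read (simple arithmetic on the 5 ms / 200 players and 0.8 ms / 300 players figures):

$$
\frac{5\,\text{ms}}{200} = 25\,\mu\text{s per player}, \qquad
\frac{0.8\,\text{ms}}{300} \approx 2.67\,\mu\text{s per player}, \qquad
\frac{25}{2.67} \approx 9.4
$$

That is roughly a 9x reduction in processing time per player.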

*It's currently being tested on the Aux2 branch to confirm I haven't missed anything. Depending on how testing goes, it'll land either in the next big update or in one of the fix patches.
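The specific opt-in mechanisms and hints aren't detailed here, so the sketch below only illustrates one generic technique of this kind: tracking column widths incrementally as rows are added, so rendering doesn't have to rescan every cell, and writing the whole table into a single output buffer. `TextTable`, `add_row`, and `render` are hypothetical names, not the real Text Table API.

```python
import io


class TextTable:
    """Hypothetical minimal text table (not the actual Text Table API)."""

    def __init__(self, *headers: str) -> None:
        self.headers = list(headers)
        self.widths = [len(h) for h in self.headers]
        self.rows = []

    def add_row(self, *cells: object) -> None:
        if len(cells) != len(self.headers):
            raise ValueError("row has the wrong number of columns")
        row = [str(c) for c in cells]
        for i, cell in enumerate(row):
            # Track the widest cell per column as rows arrive.
            if len(cell) > self.widths[i]:
                self.widths[i] = len(cell)
        self.rows.append(row)

    def render(self) -> str:
        out = io.StringIO()  # one output buffer for the whole table
        for row in [self.headers, *self.rows]:
            line = " ".join(cell.ljust(w) for cell, w in zip(row, self.widths))
            out.write(line.rstrip())  # drop trailing padding on the last column
            out.write("\n")
        return out.getvalue()


table = TextTable("name", "pos")
table.add_row("bob", 1.5)
table.add_row("alexandra", 20)
print(table.render(), end="")
```

Because widths are already known when `render()` runs, the output is produced in one pass over the rows.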

Batched Player Processing - Ongoing work

The majority of my time has been spent prototyping a different way to process all players connected to the server. Here's what it looks like now:

We spend about 19 ms processing all 200 connected players in a server tick. Part of that is profiling overhead (there's a lot of data to record), but part of it is the sheer amount of work we need to do, and we're doing it in a potentially sub-optimal manner:

- First, each player runs its own water query to determine whether it's underwater.

- Water queries, in turn, might run physics queries or look into our acceleration structures.

- Then the player needs to process its tick state, which can either call physics queries or look up memory in the game.

- After processing the tick state, we might want to gather analytics or do other processing, which requires re-caching player state, including water queries.

- We now also update Player Occlusion as part of these steps, so we have to do occlusion grid lookups.
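A heavily simplified, hypothetical model of the per-player flow listed above: each player runs its whole chain of steps before the next player starts, and the analytics step repeats the water query. `Player`, `water_query`, and `tick_player` are invented for illustration, the water query is reduced to a flat water level, and the tick-state and occlusion steps are only marked with comments.

```python
from dataclasses import dataclass


@dataclass
class Player:
    """Hypothetical per-player object: each instance holds its own state."""
    eye_height: float
    underwater: bool = False


water_query_calls = 0


def water_query(height: float, water_level: float) -> bool:
    # Stand-in for the real query, which may hit physics or acceleration structures.
    global water_query_calls
    water_query_calls += 1
    return height < water_level


def tick_player(p: Player, water_level: float) -> None:
    p.underwater = water_query(p.eye_height, water_level)  # step 1: own water query
    # step 2: tick state processing (physics queries, memory lookups) would go here
    _ = water_query(p.eye_height, water_level)  # step 3: analytics re-caches state
    # step 4: occlusion grid lookups would go here


players = [Player(h) for h in (-1.0, 0.5, 2.0)]
for p in players:  # one isolated chain of routines per player, run sequentially
    tick_player(p, water_level=0.0)
```

Even in this toy version, the water query runs twice per player, and each player's steps touch different state before moving on to the next player.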

As you can see, each player is processed by calling individual, isolated routines (each color in the screenshot is a unique routine), each of which might need to work with different memory, causing cache thrashing that costs us performance. Additionally, Unity supports running physics queries in parallel, which we can't take advantage of if we run these checks sequentially. Lastly, a good portion of the workload could be converted to Burst jobs and parallelized, which, again, we can't fully exploit in the current architecture.

My work is focused on rewriting the player processing logic to do all operations in batches (closer to ECS-style processing):

- Pack all state into compact arrays to reduce cache thrashing.

- Replace processing routines that work on single instances with ones that work on sets of instances (water queries, for example).

- Organize any physics queries into parallel jobs.

- Convert managed code to Burst jobs that can work on batches.
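A minimal sketch of the first two points, assuming a flat water level (real water queries are far more involved): player state lives in compact per-field arrays, one routine processes the whole set, and later stages reuse the cached result instead of re-running the query. Python's `array` only stands in for the packed native arrays that Burst jobs would operate on; `PlayerBatch`, `batch_water_query`, and `batch_analytics` are hypothetical names.

```python
from array import array


class PlayerBatch:
    """Hypothetical struct-of-arrays player state: one compact array per field."""

    def __init__(self, eye_heights):
        self.eye_height = array("f", eye_heights)        # packed 32-bit floats
        self.underwater = bytearray(len(self.eye_height))  # 0/1 flags cached per tick


def batch_water_query(batch: PlayerBatch, water_level: float) -> None:
    # One routine walks a contiguous array for all players instead of one call each.
    heights = batch.eye_height
    flags = batch.underwater
    for i in range(len(heights)):
        flags[i] = 1 if heights[i] < water_level else 0


def batch_analytics(batch: PlayerBatch) -> int:
    # Later stages read the cached flags rather than repeating the water query.
    return sum(batch.underwater)


batch = PlayerBatch([-1.0, 0.5, 2.0, -0.25])
batch_water_query(batch, water_level=0.0)
underwater_count = batch_analytics(batch)
print(underwater_count)  # 2
```

Keeping each field in its own contiguous array means a stage that only needs heights reads only heights, which is the cache-friendliness the list above is after.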

So far, my prototype has shown that we lose about 18% of performance doing things the naive way: using a full server demo recording of a 56-player server, the batched water query routine cost us 130 microseconds instead of the original 110.
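For reference, the 18% figure follows directly from the two timings:

$$
\frac{130\,\mu\text{s} - 110\,\mu\text{s}}{110\,\mu\text{s}} = \frac{20}{110} \approx 0.18
$$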

The good news is that this 18% loss was measured before I started optimizing the logic, applying Burst processing and parallel jobs (section 4 is an example, section 3 is a candidate), and reducing data organization overhead (section 2). In my eyes, the prototype is a success, as it shows that this approach gives us access to heavy-duty processing tools. I'm hoping to reach the testing phase for batched water queries in the next patch cycle.
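To illustrate the scheduling side of parallel jobs, here's a sketch that splits one batched query into fixed-size index ranges that workers process independently, similar in spirit to how a parallel-for job divides its work by batch size. It uses Python threads purely to show the structure: because of the GIL this won't actually speed up pure-Python work, and none of it is Unity's job API. `parallel_water_query`, `process_range`, and `batch_size` are hypothetical names, and the flat water level is an assumption.

```python
from concurrent.futures import ThreadPoolExecutor


def process_range(heights, flags, start, end, water_level):
    # Each chunk writes a disjoint slice, so workers never touch the same index.
    for i in range(start, end):
        flags[i] = 1 if heights[i] < water_level else 0


def parallel_water_query(heights, water_level, batch_size=64, workers=4):
    flags = bytearray(len(heights))
    ranges = [
        (s, min(s + batch_size, len(heights)))
        for s in range(0, len(heights), batch_size)
    ]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [
            pool.submit(process_range, heights, flags, s, e, water_level)
            for s, e in ranges
        ]
        for f in futures:
            f.result()  # re-raise any exception from a worker
    return flags


heights = [float(i % 5) - 2.0 for i in range(200)]
flags = parallel_water_query(heights, water_level=0.0)
print(sum(flags))  # 80
```

The batch size trades scheduling overhead against load balancing: larger chunks mean fewer tasks, smaller chunks spread uneven work more evenly across workers.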